Localization

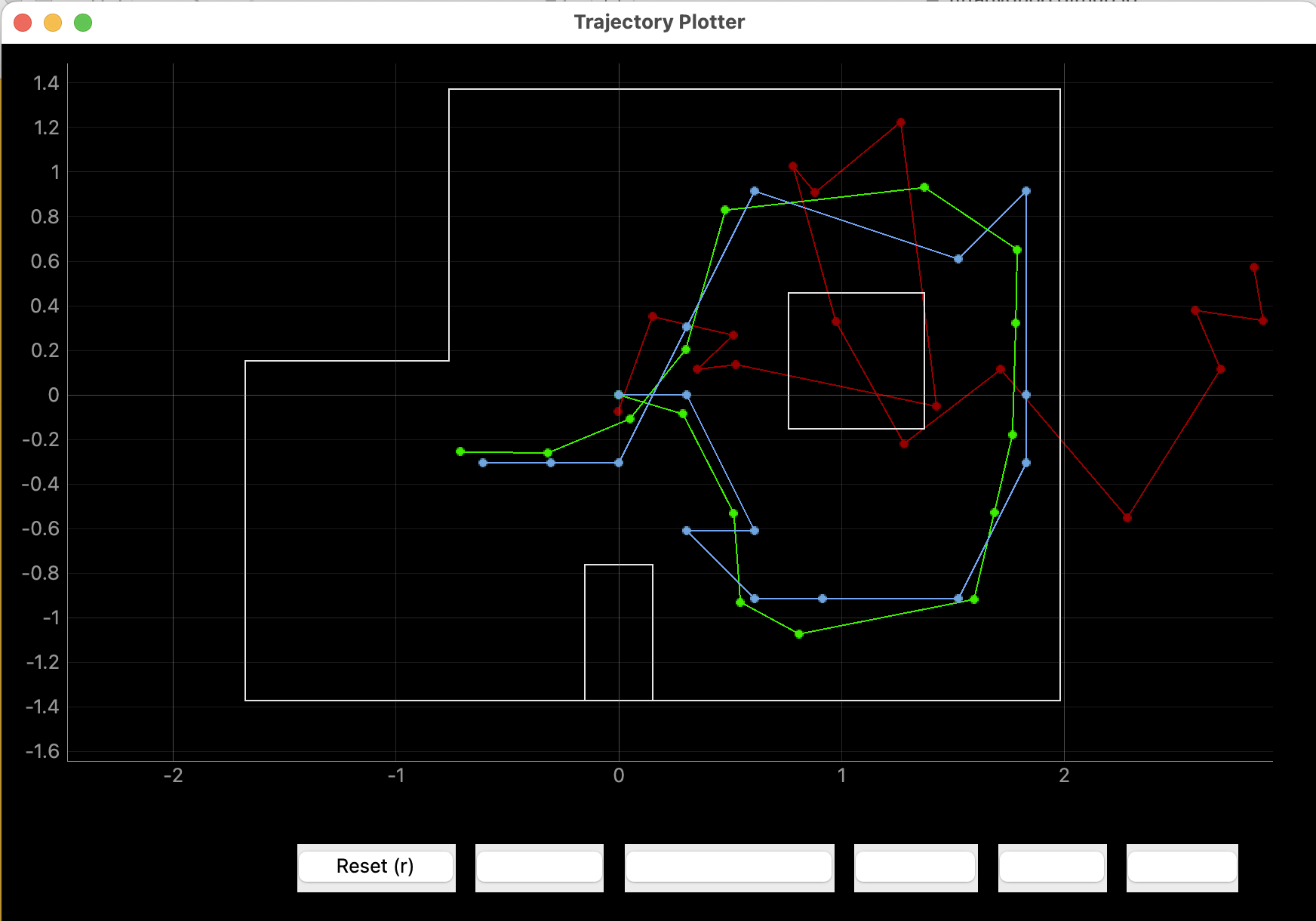

I placed my robot at each of the marked poses in the lab and ran the new localization routine, which takes 18 sensor measurements and predicts the robot's location. The results for each location are as follows:

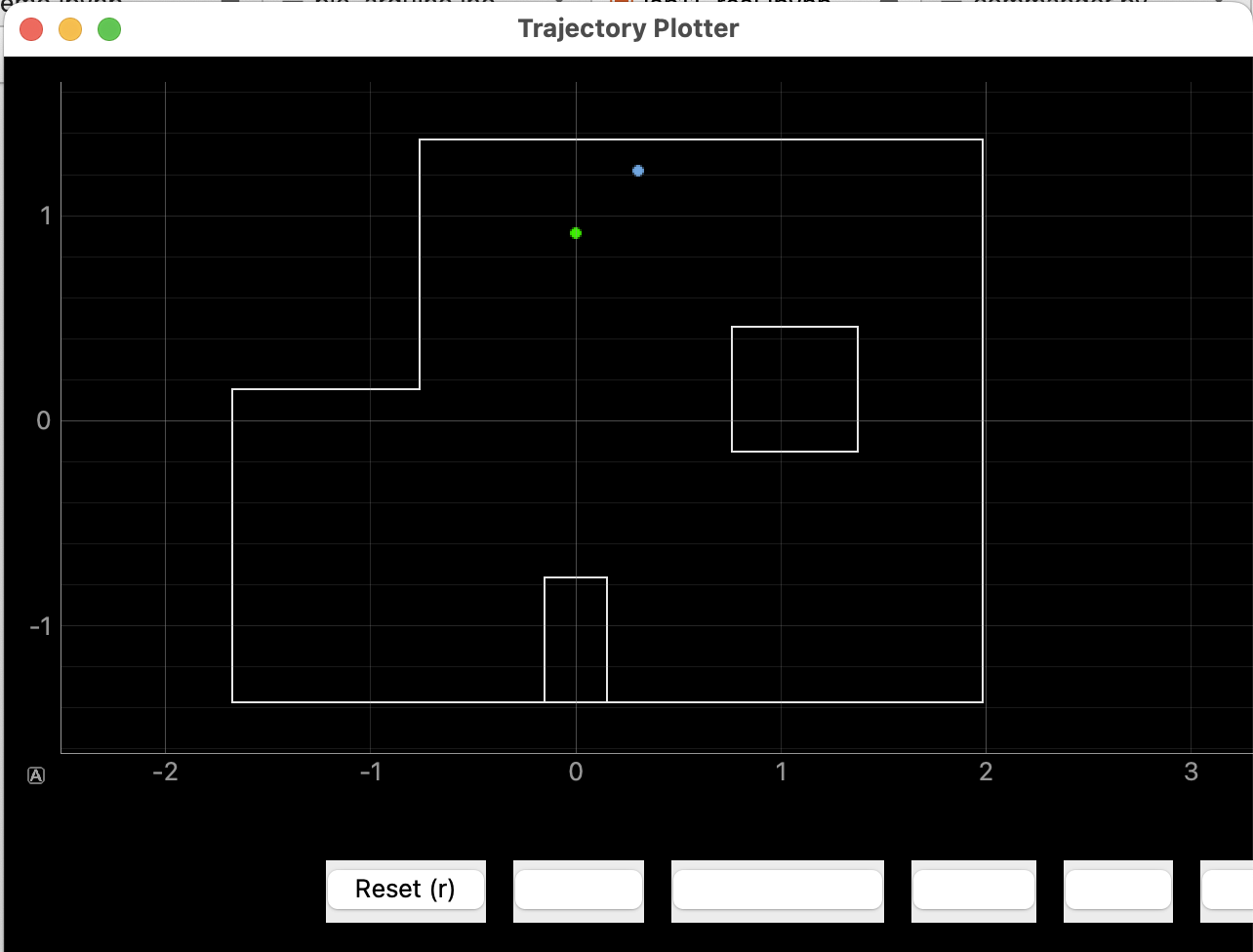

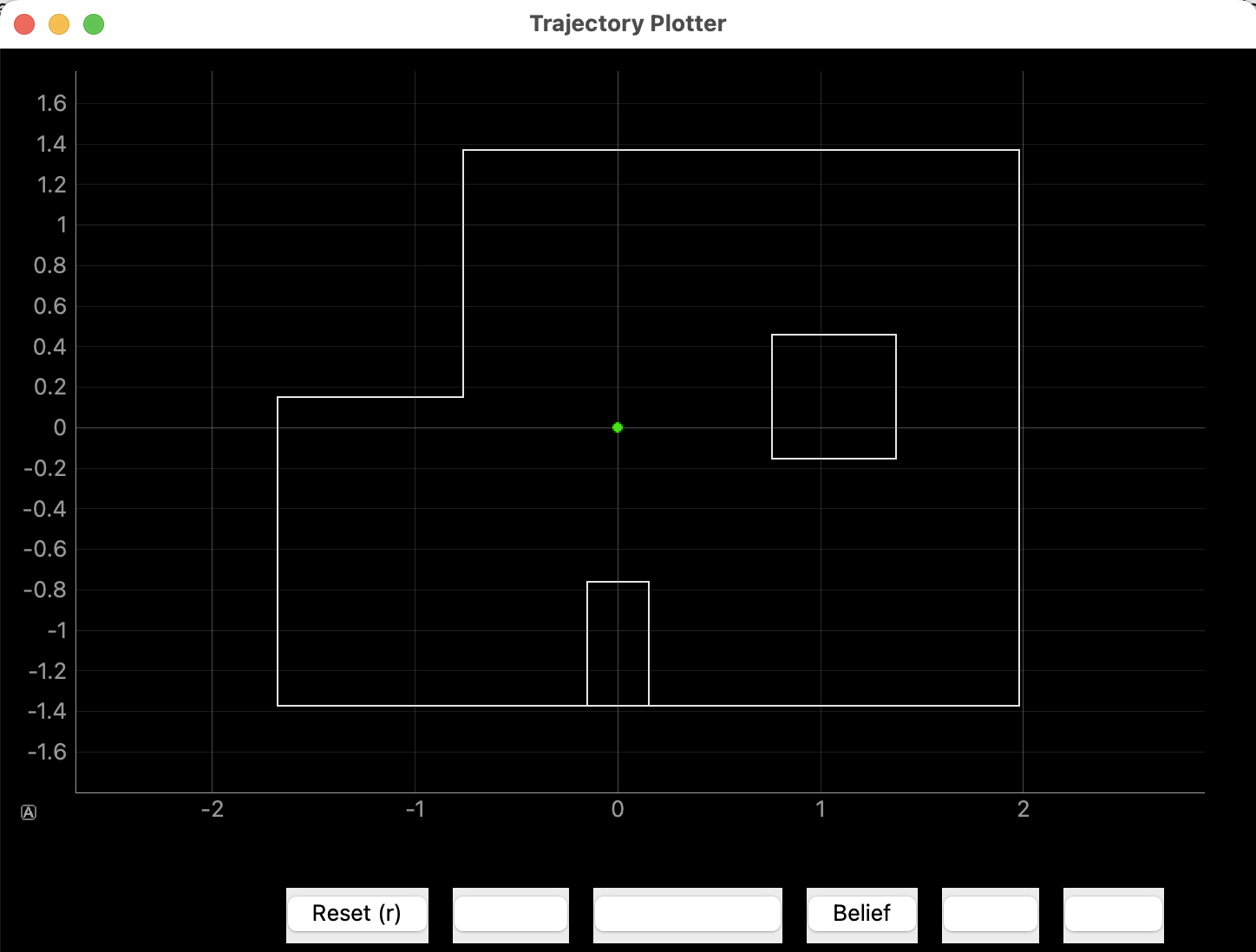

(0,0)

Although this pose is not one of the four included in the lab, my localization result matched the ground truth perfectly, so I had to include it :)

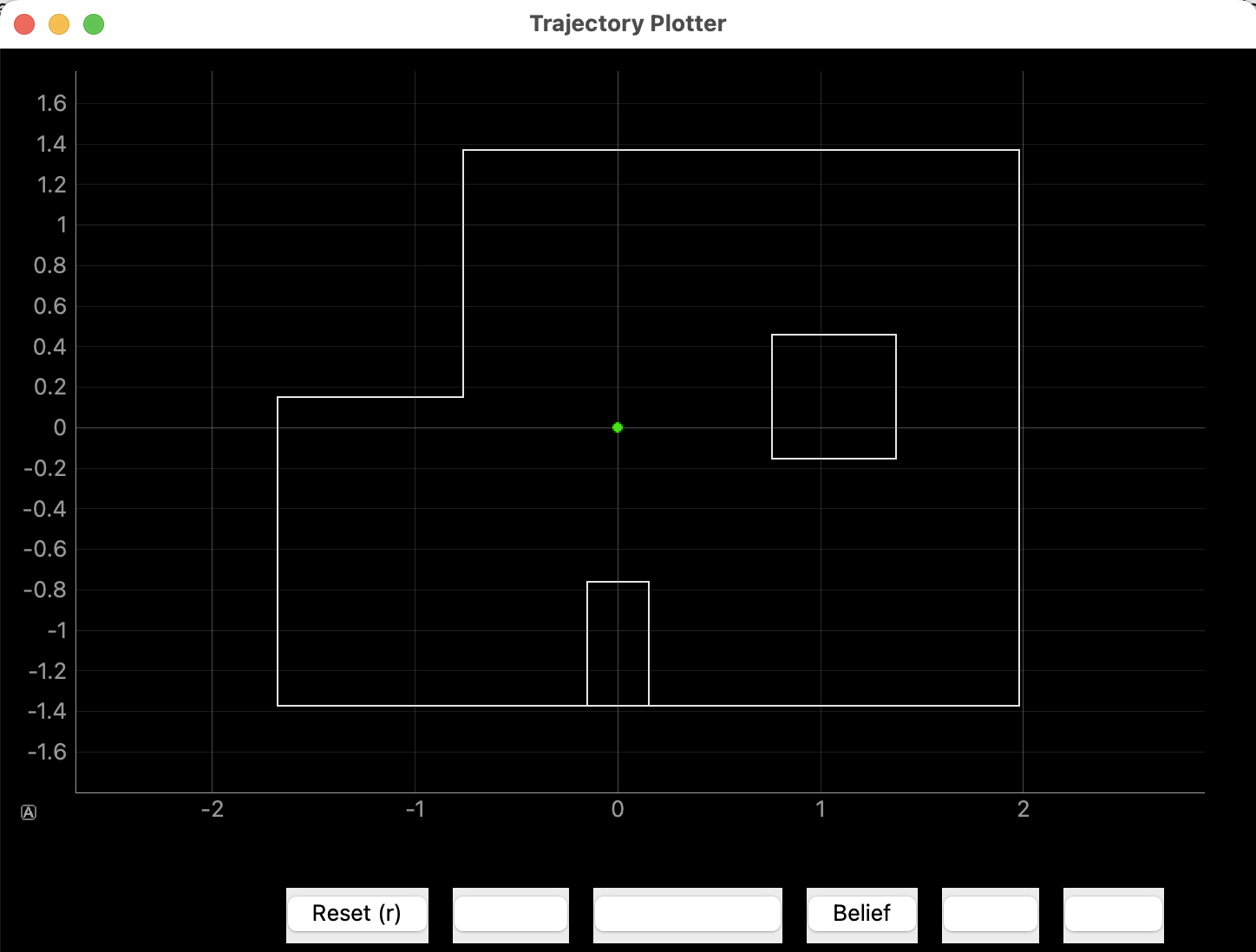

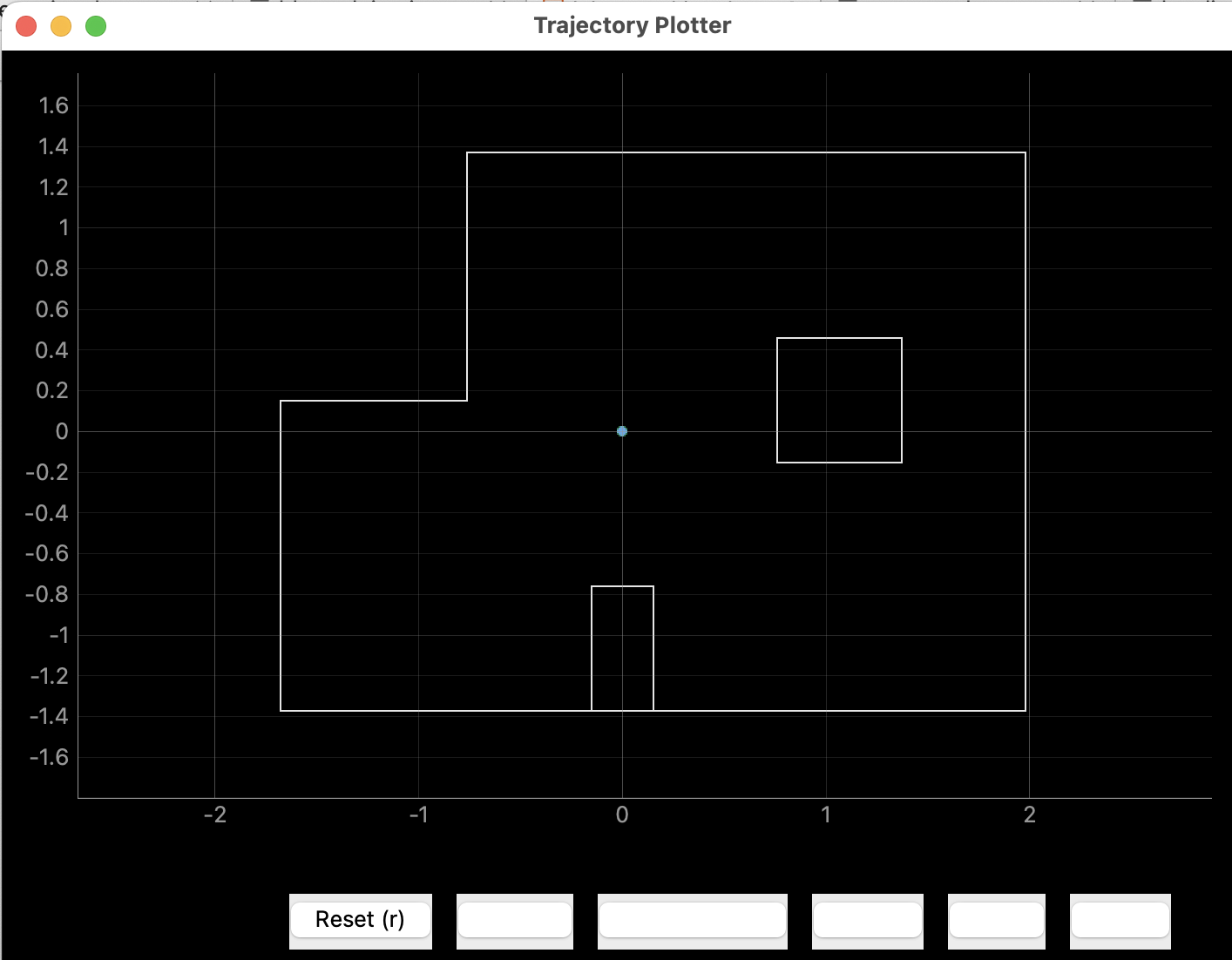

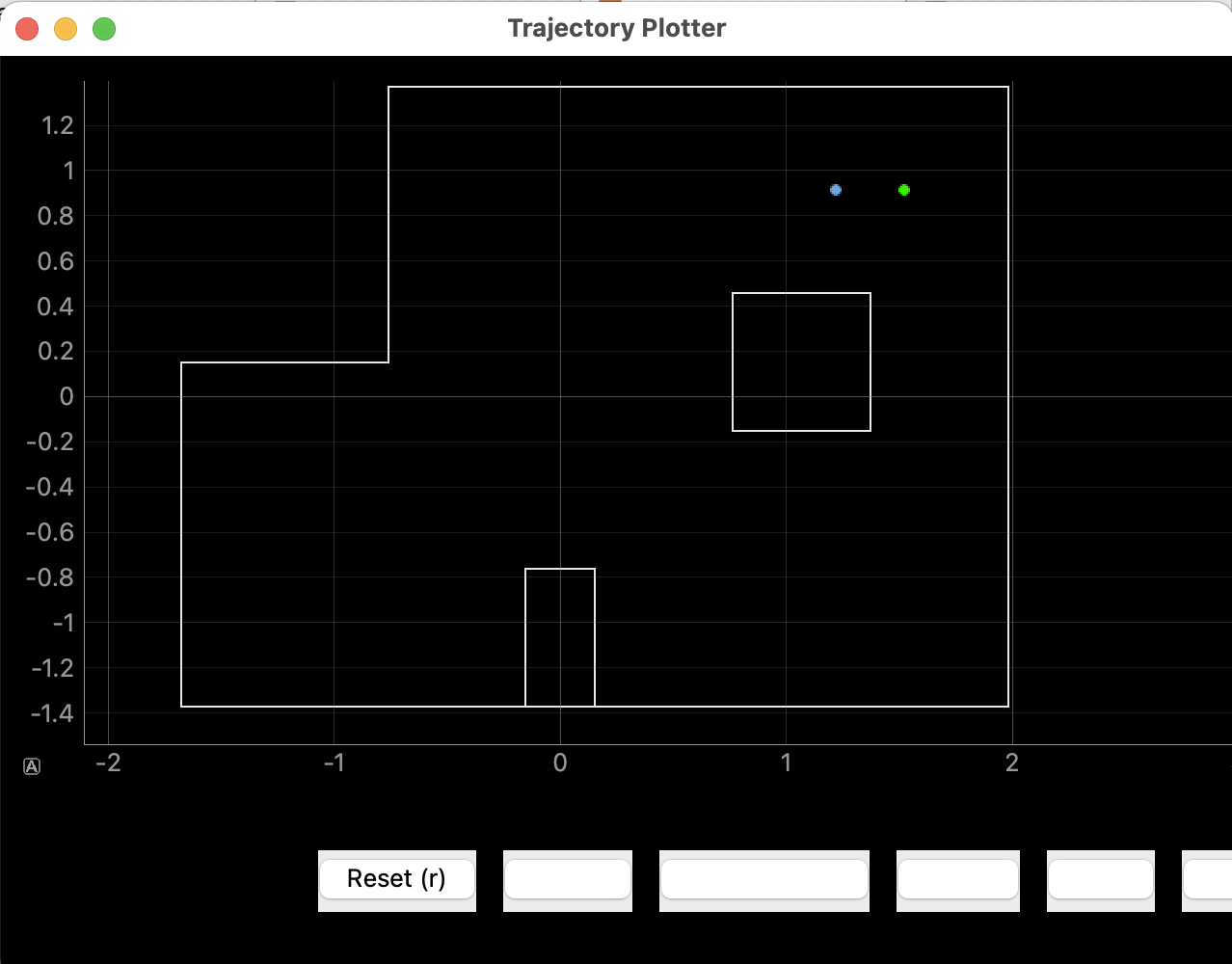

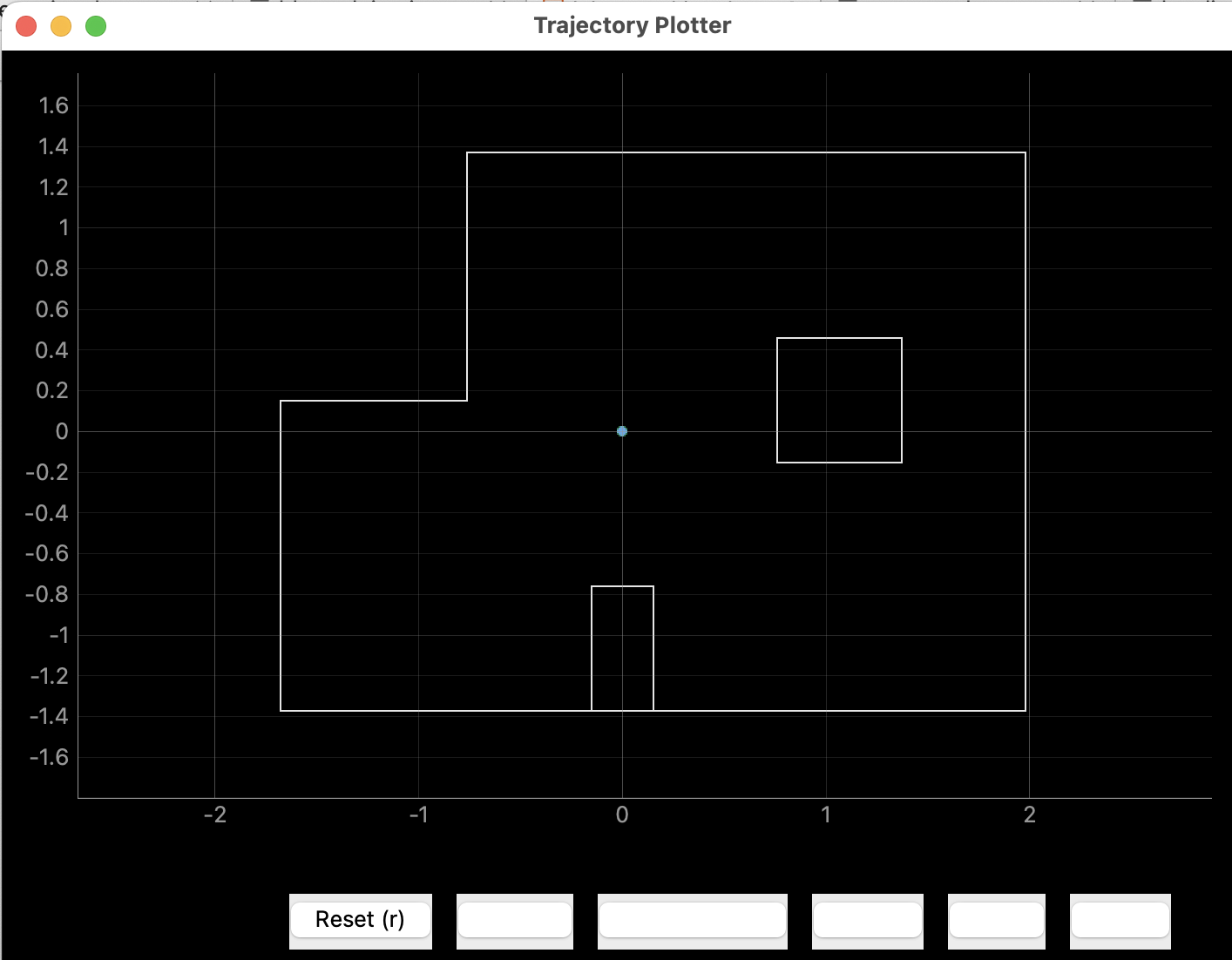

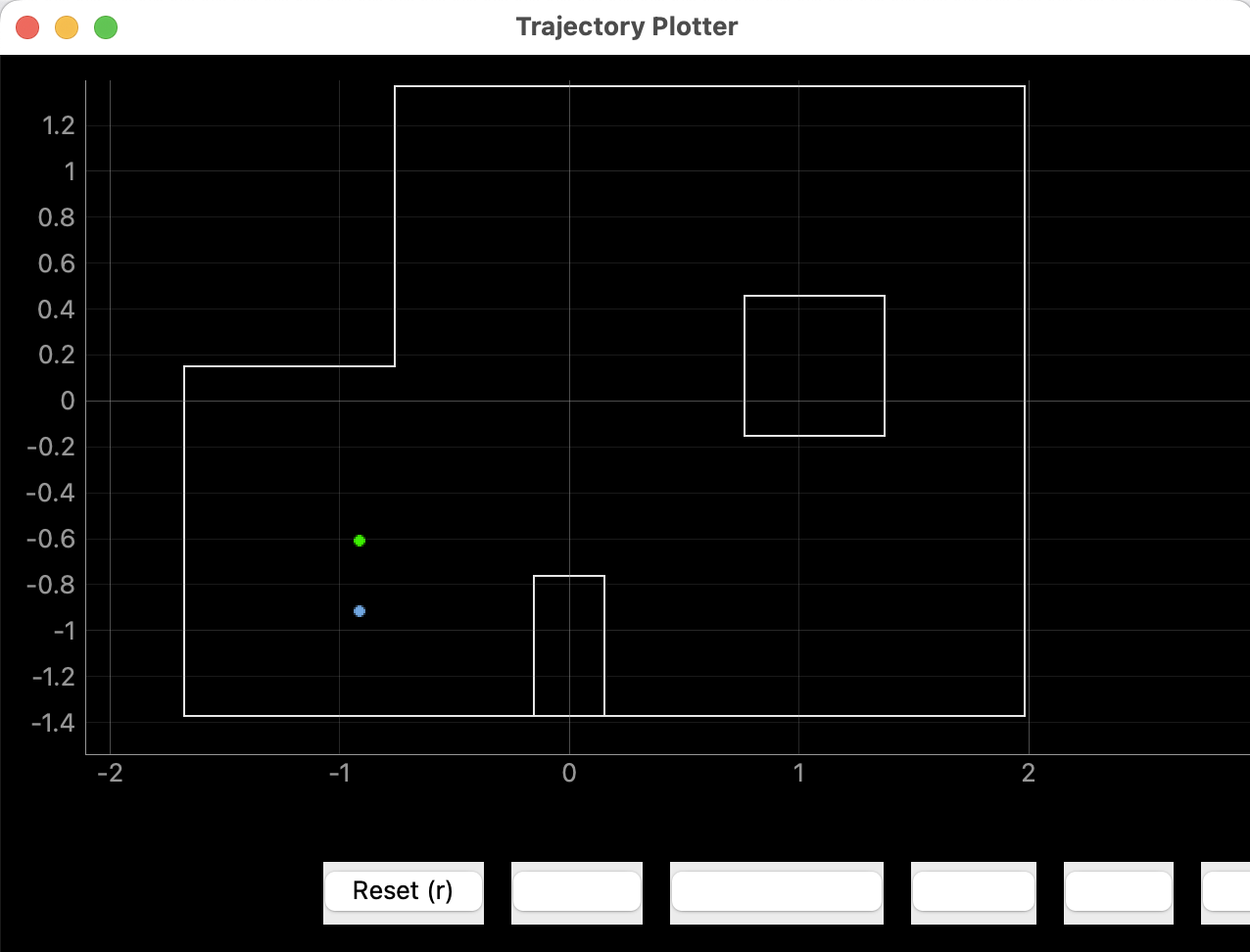

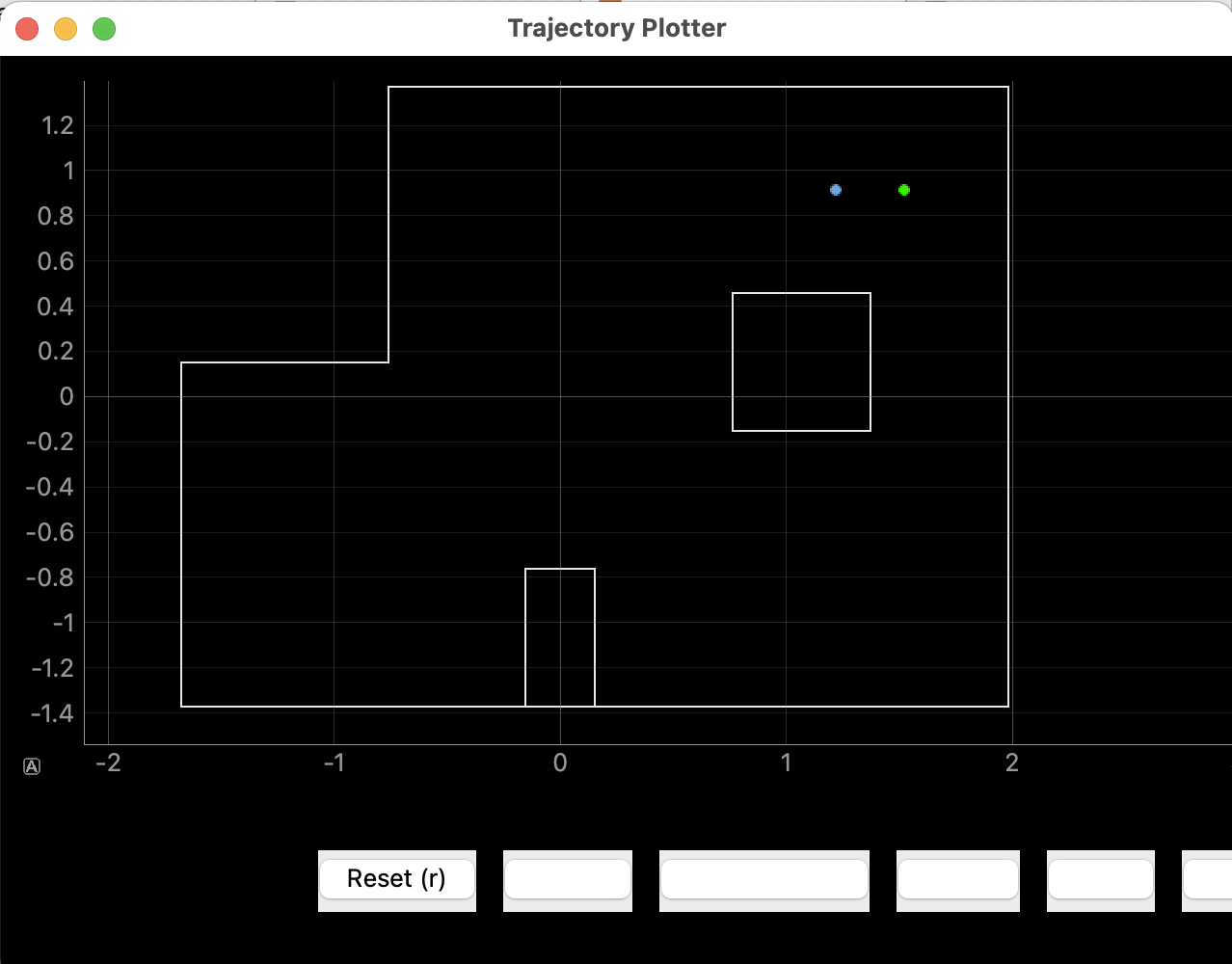

(-3,-2) and (0,3)

I also got pretty good results for the two points above, though it took many tries. The green dot represents the ground truth position, and the blue dot is the localization result.

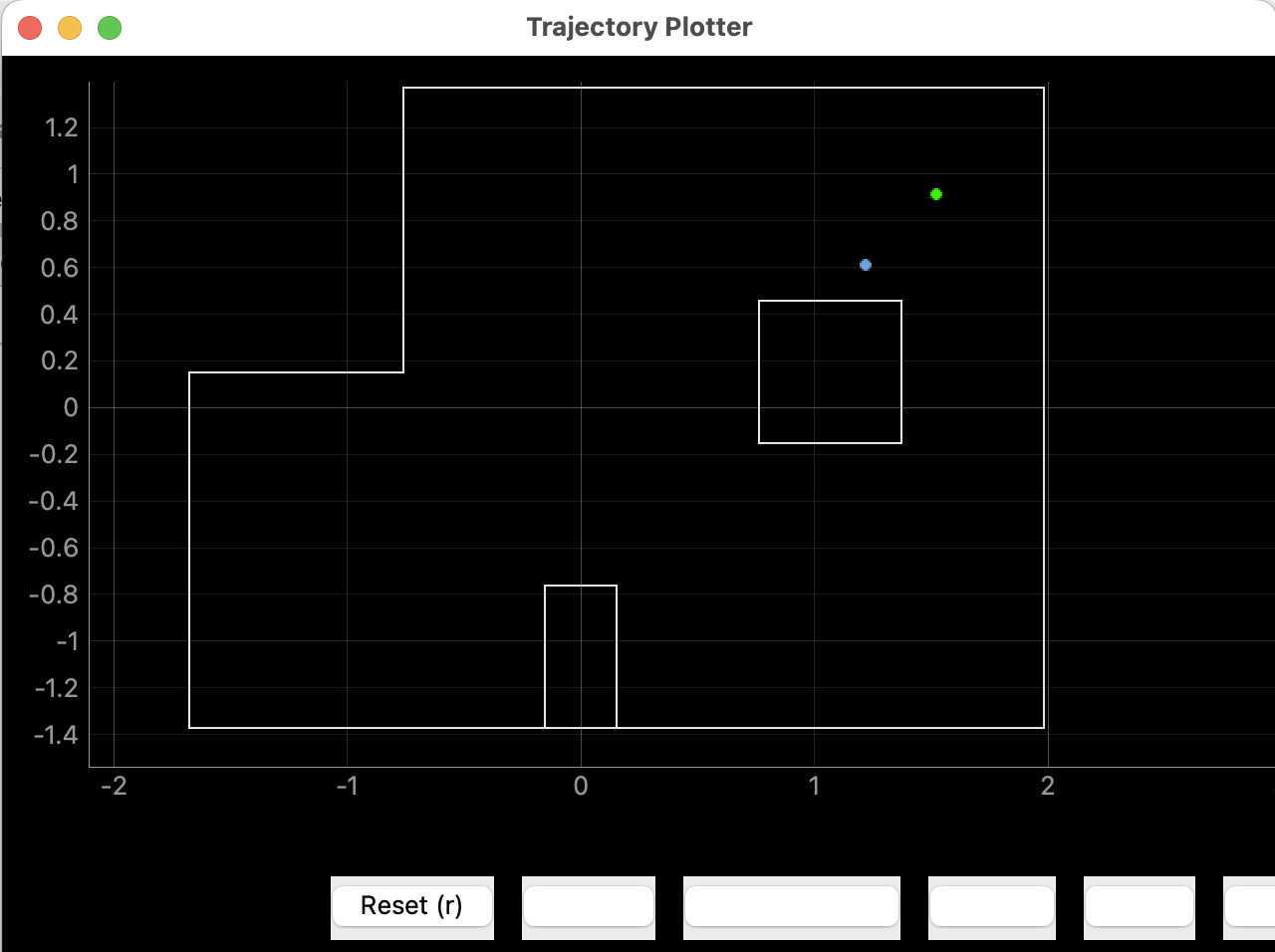

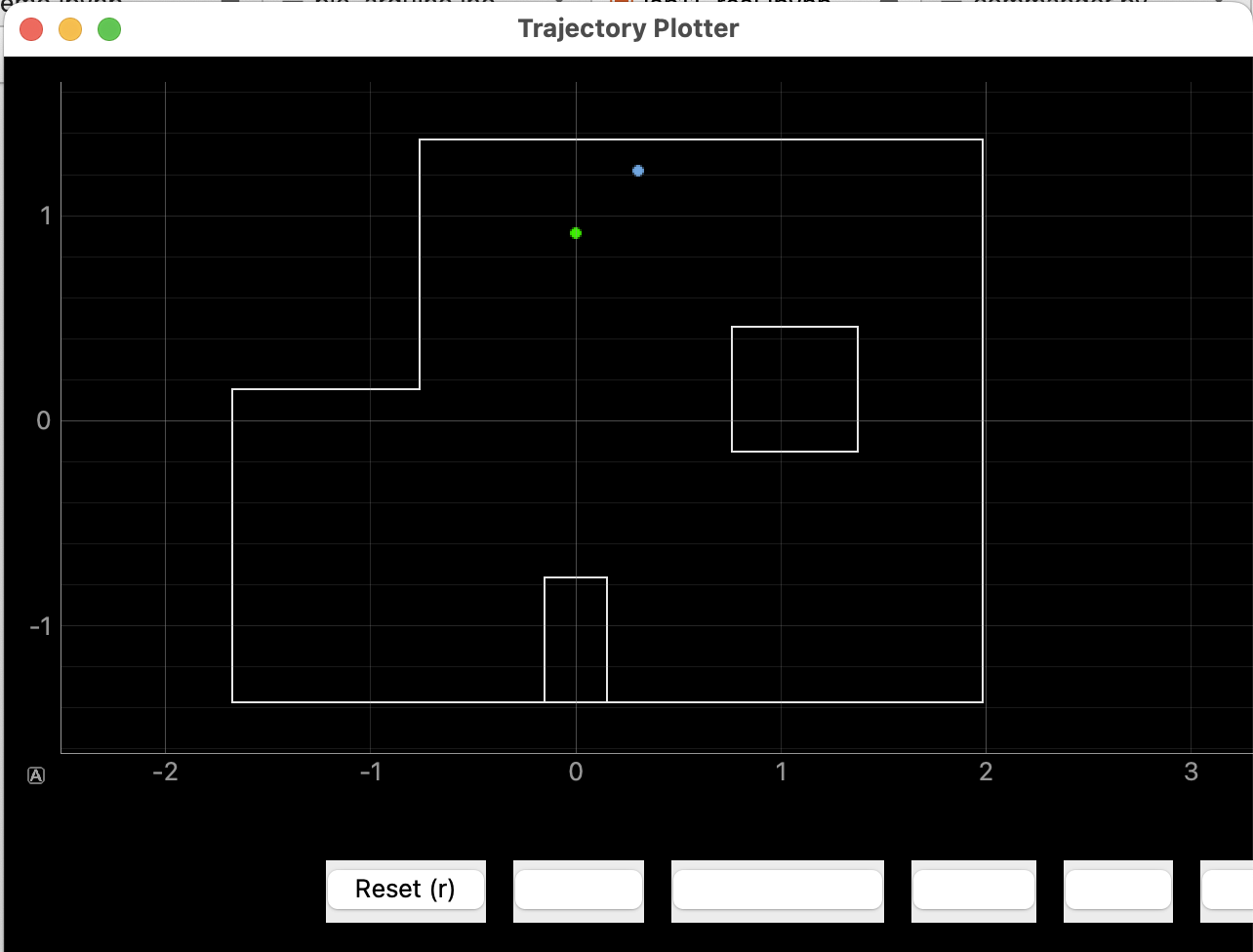

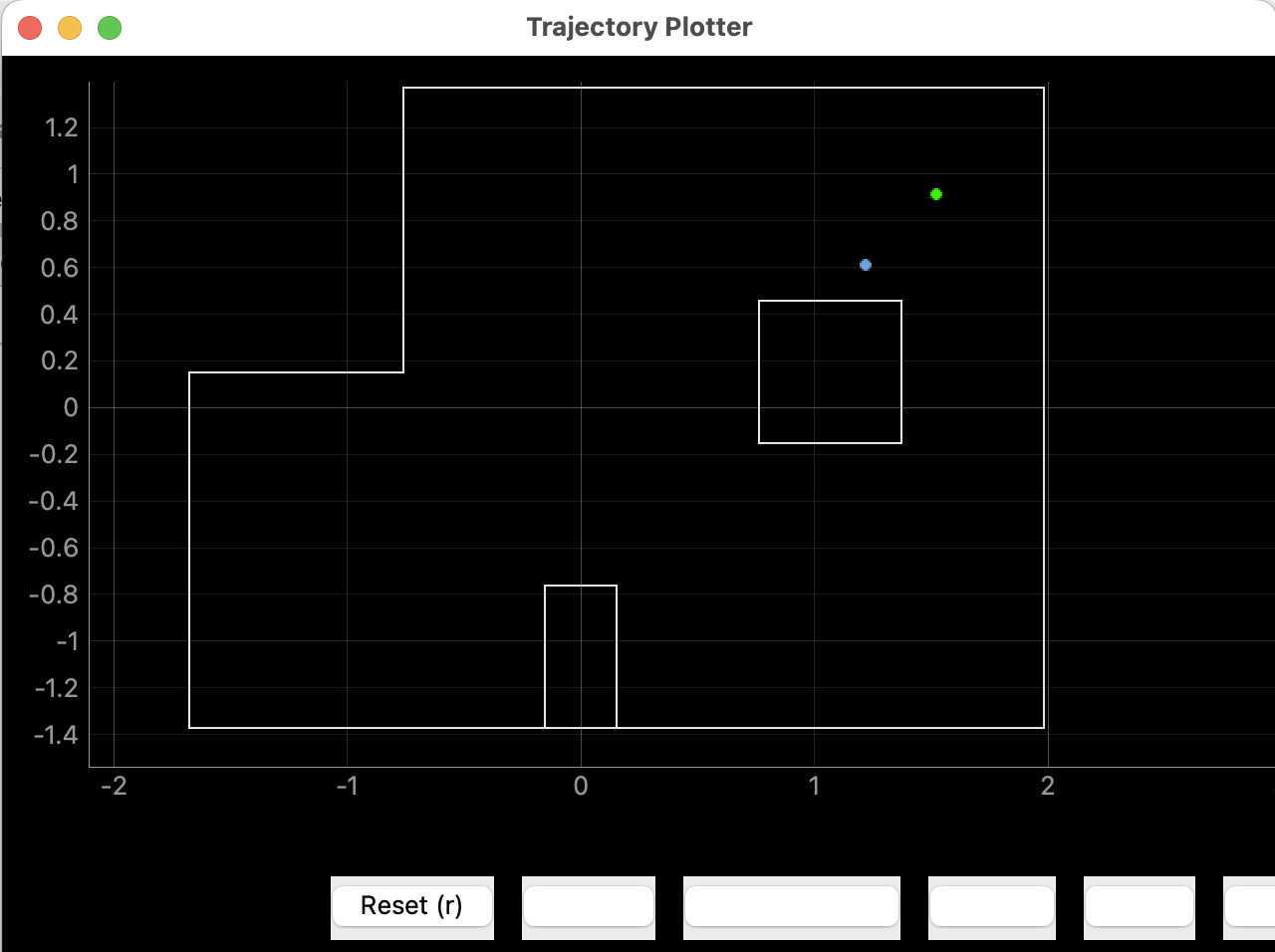

(5,3)

This point was harder to localize, and I got mixed results even after many tries. In many runs I successfully collected the 18 sensor data points and constructed a polar plot of them, only to have the localization process return a completely wrong prediction with high confidence. One change that helped was to manually remove data points that should have been far from the robot but were recorded as much closer because of faulty ToF sensor readings. Once these points appeared farther away, as expected, the localization performed better.
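
The manual cleanup described above could in principle be automated. The following is only a minimal sketch, not the procedure used here (the points were edited by hand). It assumes the 18 readings are evenly spaced around a full rotation and given in meters, and it replaces a suspicious reading with the median of its neighbors rather than deleting it. The function name `repair_short_readings` and the parameters `window` and `ratio` are hypothetical.

```python
import numpy as np

def repair_short_readings(ranges_m, window=2, ratio=0.5):
    """Replace readings that are much shorter than their angular neighbors.

    ranges_m: one full scan, evenly spaced in angle (assumed, in meters).
    window:   number of neighbors considered on each side (hypothetical default).
    ratio:    a reading below ratio * neighbor median is treated as faulty
              (hypothetical threshold, would need tuning on real data).
    """
    ranges = np.asarray(ranges_m, dtype=float)
    n = len(ranges)
    repaired = ranges.copy()
    for i in range(n):
        # Neighbor indices wrap around, because the scan covers a full circle.
        idx = [(i + k) % n for k in range(-window, window + 1) if k != 0]
        local_median = np.median(ranges[idx])
        if ranges[i] < ratio * local_median:
            # Suspiciously short reading: use the neighbors' median instead.
            repaired[i] = local_median
    return repaired
```

A threshold like this would also flatten real narrow obstacles, so it only makes sense when the short readings are known to be sensor faults.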

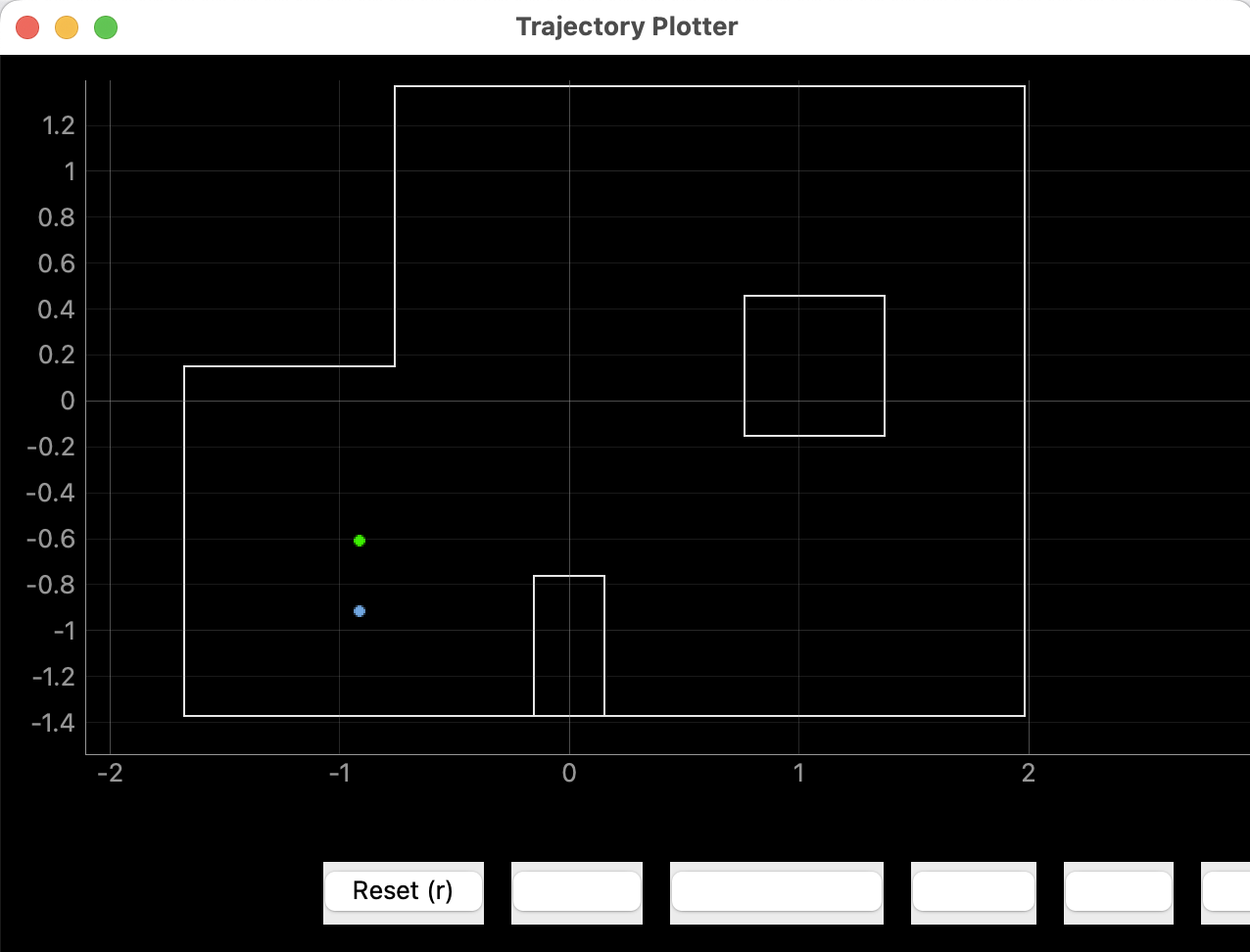

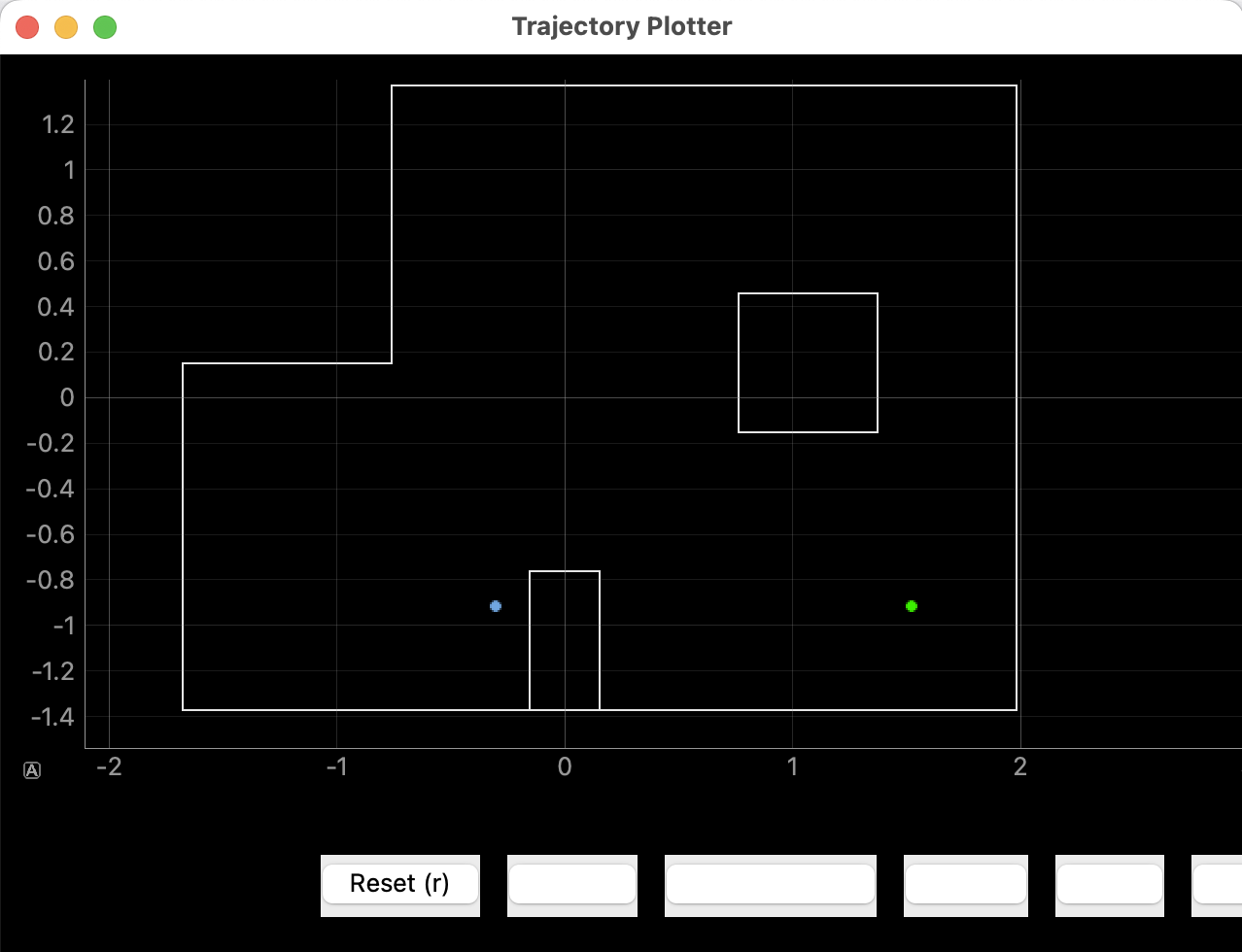

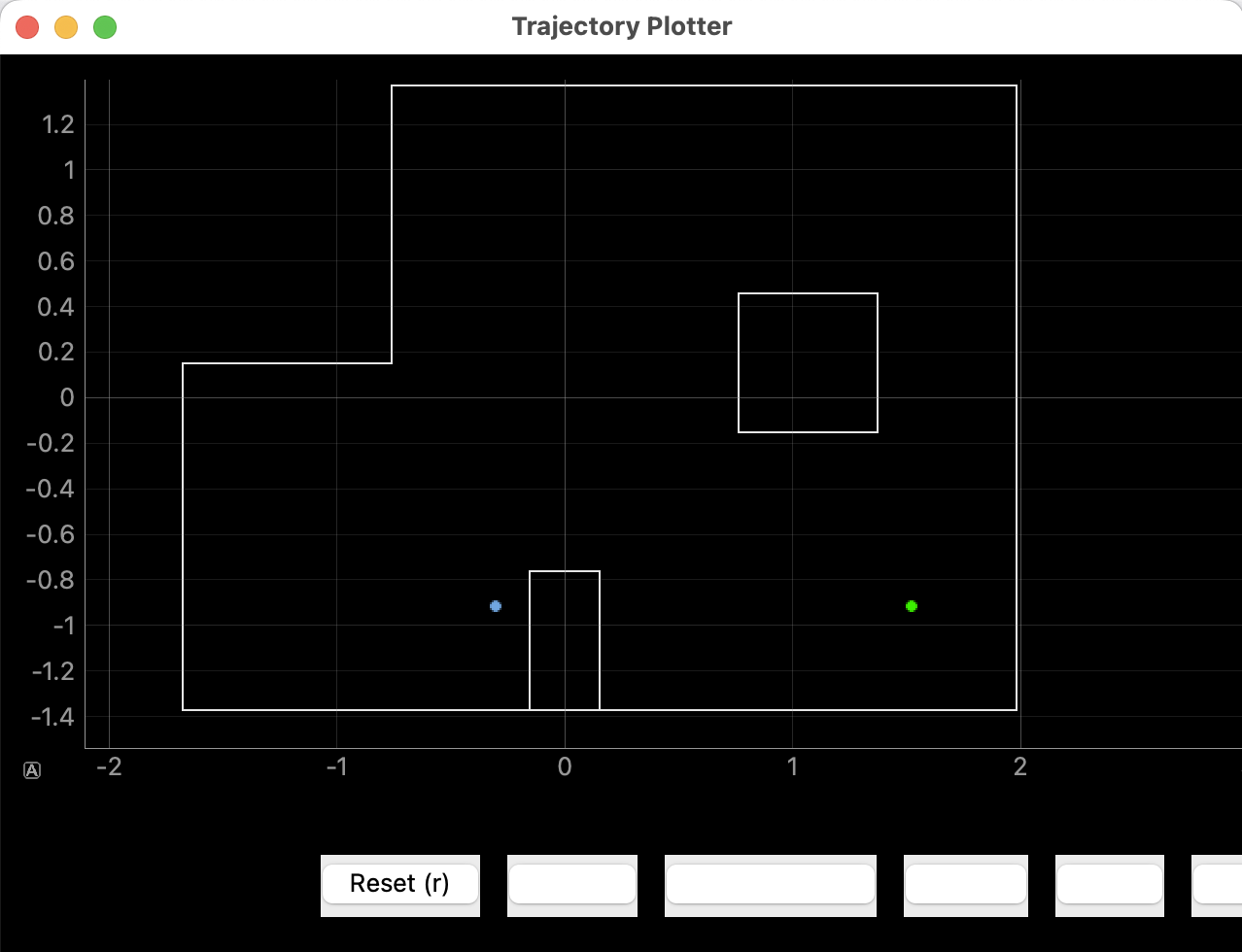

(5,-3)

Lastly, this point was very difficult to localize. Across many attempts, the localization process kept mistaking (5,-3) for a similar point farther to the left. Both points have walls to the right and below and are more open on the other sides, so the localization process may have confused the two. In addition, my ToF sensors are not very reliable: they sometimes returned faulty values or sensed objects that weren't there, which made it hard to localize accurately.
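
One way to see how two similar poses can be confused is to compare how well a scan fits each of them. The sketch below assumes a Gaussian sensor model with independent beams, which this write-up does not confirm. The function name `log_likelihood`, the noise value `sigma_m`, and the expected ranges for the two poses are all hypothetical, made-up numbers for illustration, not measurements from the lab.

```python
import numpy as np

def log_likelihood(observed_m, expected_m, sigma_m=0.1):
    """Log-likelihood of a scan under an assumed independent Gaussian beam model."""
    observed = np.asarray(observed_m, dtype=float)
    expected = np.asarray(expected_m, dtype=float)
    residual = observed - expected
    return float(
        -0.5 * np.sum((residual / sigma_m) ** 2)
        - observed.size * np.log(sigma_m * np.sqrt(2.0 * np.pi))
    )

# Hypothetical expected scans (meters) for two poses that look alike:
# both see nearby walls on most beams and differ only on three "open" beams.
expected_true = np.full(18, 1.0)
expected_true[8:11] = 2.5      # true pose: open side is far away
expected_similar = np.full(18, 1.0)
expected_similar[8:11] = 1.5   # similar pose: open side is closer

# A clean scan taken at the true pose.
clean_scan = expected_true.copy()

# The same scan, but the three long beams read short (simulated ToF fault).
faulty_scan = expected_true.copy()
faulty_scan[8:11] = 1.6

clean_prefers_true = (
    log_likelihood(clean_scan, expected_true)
    > log_likelihood(clean_scan, expected_similar)
)
faulty_prefers_similar = (
    log_likelihood(faulty_scan, expected_similar)
    > log_likelihood(faulty_scan, expected_true)
)
```

With these made-up numbers the clean scan favors the true pose, while the scan with three short readings favors the similar pose. Under this kind of model, a few faulty long-range beams are enough to flip the result when most beams agree between the two poses.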